Thanks to new generative AI models, robots no longer simply execute commands. They perceive, interpret and interact with their environment. This spectacular progress heralds the arrival of multifunctional robots capable of performing complex tasks. Here we take a look at these advances and the skills needed to master these technologies.

When generative AI transforms robotics: spectacular demonstrations

The Mechanical Turk is back in the age of AI.

In early November, Chinese car manufacturer Xpeng unveiled its humanoid robot Iron. The fluidity of its movements has sparked both fascination and scepticism on social media. Internet users were quick to cry foul, suspecting that a human was hidden inside the machine, much like the Mechanical Turk, the famous fake chess-playing automaton.

To put an end to the rumours, Xpeng responded with a second video. In it, we see an assistant cutting away the layers of fabric covering the robot's leg to reveal its internal mechanisms... with no human hidden inside.

This type of striking demonstration is set to become more commonplace with the advances made in robotics in recent months. As in many other fields, we owe this disruptive leap to generative AI.

But here, the challenge is not to generate text or images. Rather, it is to equip robots with new motor and sensory capabilities, enabling them to better understand their environment and to gain autonomy and versatility.

©Xpeng

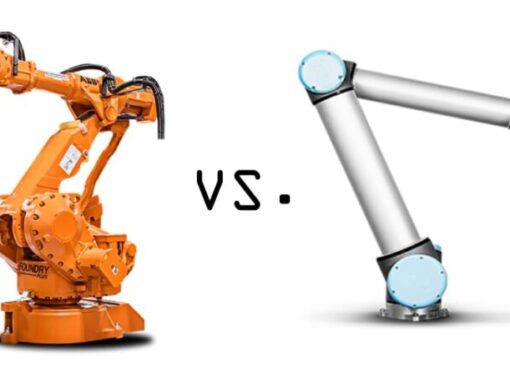

From hand-programmed robots to autonomous models

The limitations of traditional programming

«Currently, programming robots is an extremely complex task,» explains Stéphane Doncieux, Director of ISIR (Institute for Intelligent Systems and Robotics) and Professor of Computer Science at Sorbonne University-CNRS. Every behaviour a robot performs must be explicitly programmed by an engineer, who has to anticipate each required action, the conditions for executing it, and the appropriate reaction to any unexpected event.

This limitation explains why industrial robots are confined to performing the same task over and over again on the production lines of car manufacturers and other manufacturing companies, even though they are theoretically reprogrammable and increasingly collaborative.

More recently, these robots have been equipped with vision algorithms to detect and sort defective parts on a production line. «This use case remains confined to highly controlled contexts,» continues Stéphane Doncieux. «In this type of scenario, we know precisely which parts go in and which come out.»

Domestic robots: a first step towards autonomy

In the domestic sphere, robot vacuum cleaners and lawnmowers operate in a less controlled environment than in factories. However, the tasks expected of them remain simple enough to be expressed through basic behaviours. When encountering an obstacle, the domestic robot changes course and continues on its way.
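To illustrate what such a «basic behaviour» looks like when an engineer writes it by hand, here is a minimal Python sketch of a bump-and-turn rule. The `Command` type, the speeds and the turn rate are hypothetical values chosen for illustration, not taken from any real product.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Command:
    """Hypothetical velocity command for a wheeled domestic robot."""
    linear: float   # forward speed in m/s
    angular: float  # turning rate in rad/s


def bump_and_turn(bumper_pressed: bool, rng: random.Random) -> Command:
    """Hand-written reactive rule: go straight; on contact, stop and turn away."""
    if bumper_pressed:
        # Obstacle hit: stop advancing and rotate left or right at random.
        return Command(linear=0.0, angular=rng.choice([-1.5, 1.5]))
    # No obstacle: keep driving straight ahead.
    return Command(linear=0.3, angular=0.0)


if __name__ == "__main__":
    rng = random.Random(42)
    for pressed in [False, False, True, False]:
        print(pressed, bump_and_turn(pressed, rng))
```

Every situation the robot may encounter has to be anticipated in rules of this kind, which is precisely the limitation of explicit programming.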

The contribution of generative AI to robotics

The new generation of AI-powered robots can perform much more sophisticated tasks. To illustrate the benefits of generative AI, Cédric Vasseur, an expert in artificial intelligence and robotics and a trainer at ORSYS, advises his trainees to watch the demonstration videos of Figure AI's humanoid robots.

«They demonstrate in concrete terms what can be achieved today, whether in visual or textual recognition.» In one of these videos, we see a robot carefully placing plates, bowls and glasses into a dishwasher.

The contribution of the new VLM and VLA models for robots

To achieve such feats, robotics engineers use models derived from the famous large language models (LLMs).

Vision-language models (VLMs) enable the robot to link images and language, whereas vision-language-action models (VLAs) transform these perceptions into coordinated movements.

The result: with these models, a robot can now understand an instruction in natural language, observe a scene, and then act.

Equipped with multiple sensors (flex, temperature and ultrasonic sensors), 3D RGB-D cameras that combine colour (RGB) and depth (D) data, and lidars, the robot takes in information from its surroundings and adapts its behaviour accordingly.
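To give an idea of how an RGB-D camera turns a depth image into 3D information, the sketch below back-projects pixels into camera-frame points using the standard pinhole camera model. The intrinsic parameters (`fx`, `fy`, `cx`, `cy`) and the convention that a depth of 0 means «no measurement» are assumptions; real values come from the camera's calibration.

```python
from typing import List, Tuple

Point3D = Tuple[float, float, float]


def deproject_pixel(u: int, v: int, depth_m: float,
                    fx: float, fy: float, cx: float, cy: float) -> Point3D:
    """Pinhole model: pixel (u, v) at depth Z maps to ((u-cx)Z/fx, (v-cy)Z/fy, Z)."""
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


def depth_image_to_points(depth: List[List[float]],
                          fx: float, fy: float, cx: float, cy: float) -> List[Point3D]:
    """Convert a depth image (rows of values in metres) into a list of 3D points."""
    points = []
    for v, row in enumerate(depth):      # v = row index (image y)
        for u, z in enumerate(row):      # u = column index (image x)
            if z > 0.0:                  # assumed convention: 0 = missing depth
                points.append(deproject_pixel(u, v, z, fx, fy, cx, cy))
    return points


if __name__ == "__main__":
    # Hypothetical intrinsics for a 640x480 sensor.
    print(deproject_pixel(920, 240, 2.0, fx=600.0, fy=600.0, cx=320.0, cy=240.0))
    # (2.0, 0.0, 2.0): two metres ahead, two metres to the right of the optical axis
```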

VLA models then convert this visual and textual data into motor commands. For Stéphane Doncieux, the aim is to extend the promise of generative AI tools to robotics. «The objective is to create models that are sufficiently general to understand an environment, receive instructions in natural language, and enable the robot to perform the actions necessary to accomplish the requested task.»
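How can a language-style model emit motor commands? Published VLAs such as RT-2 and OpenVLA represent each continuous action dimension as one of 256 discrete bins, so that actions can be generated as tokens. The sketch below shows that discretization and its inverse; the action bounds, the uniform bins and the 3-dimensional action are simplifying assumptions, not the exact scheme of any particular model.

```python
from typing import List, Sequence

N_BINS = 256  # number of discrete values per action dimension


def tokenize(action: Sequence[float], low: Sequence[float],
             high: Sequence[float], n_bins: int = N_BINS) -> List[int]:
    """Map each continuous action dimension to an integer bin in [0, n_bins - 1]."""
    tokens = []
    for a, lo, hi in zip(action, low, high):
        a = min(max(a, lo), hi)              # clip to the assumed action range
        frac = (a - lo) / (hi - lo)          # position within the range, 0.0 to 1.0
        tokens.append(min(int(frac * n_bins), n_bins - 1))
    return tokens


def detokenize(tokens: Sequence[int], low: Sequence[float],
               high: Sequence[float], n_bins: int = N_BINS) -> List[float]:
    """Map each bin back to the centre of its interval (the motor command)."""
    return [lo + (t + 0.5) * (hi - lo) / n_bins
            for t, lo, hi in zip(tokens, low, high)]


if __name__ == "__main__":
    low, high = [-1.0, -1.0, -1.0], [1.0, 1.0, 1.0]   # hypothetical bounds
    tokens = tokenize([-1.0, 0.0, 1.0], low, high)
    print(tokens)                                    # [0, 128, 255]
    print(detokenize(tokens, low, high))
```

The trade-off: discrete tokens let the robot reuse the language model's output machinery, at the cost of a quantization error of at most half a bin per dimension.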

The robot that loads the dishwasher: an iconic example

The Figure AI demonstration video mentioned above shows a robot handling plates, glasses and cutlery with surprising precision.

What may seem trivial to a human, such as loading a dishwasher, is actually very complex for a robot. «Grasping and manipulating an object involves not only vision but also grounding in the robot's body: it requires sufficient manual dexterity, potentially tactile feedback, and many other factors that are far more complex than a strategy game. A machine can beat humans at chess and Go, but it has the greatest difficulty grasping and opening a water bottle,» continues Stéphane Doncieux.

This is the famous Moravec's paradox, which boils down to the idea that «the most difficult thing in robotics is often the easiest thing for humans».

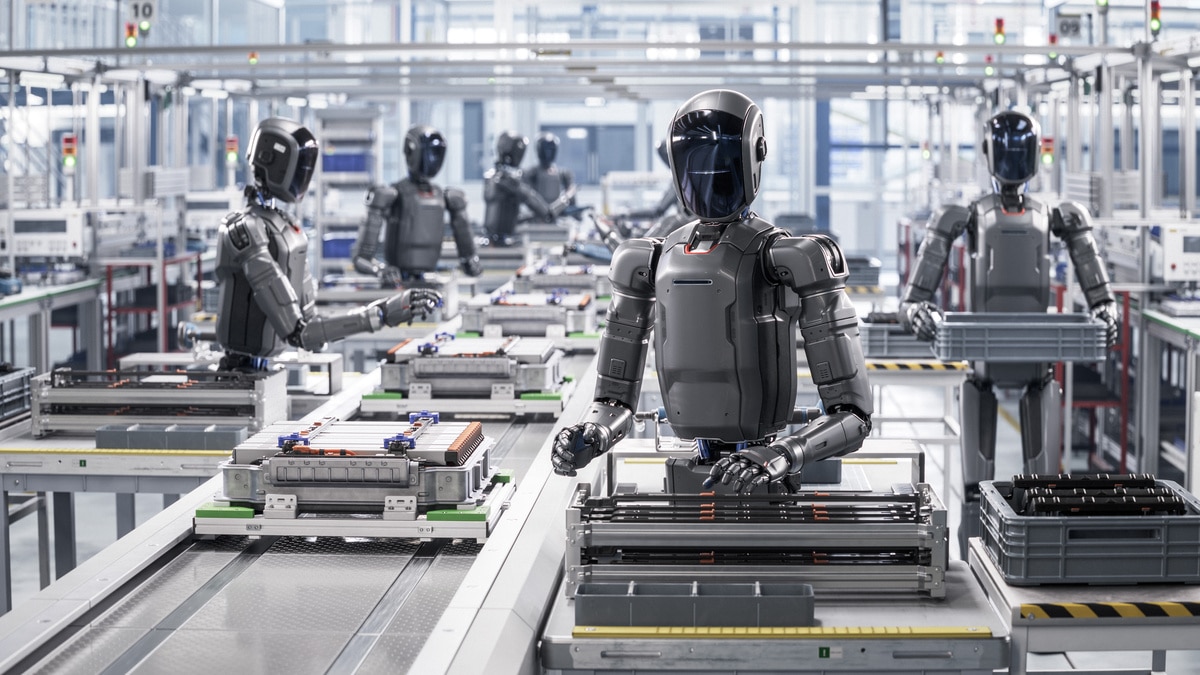

©Tesla

Towards foundation models... for robots

The era in which each robot had to learn on its own is coming to an end. New so-called «foundation» models are emerging, capable of ingesting the experience of many different machines.

Generic VLAs such as RT-2 (soon to be superseded by larger versions), Nvidia's Isaac GR00T or Figure AI's Helix extend these capabilities by drawing on data from a multitude of environments.

Open models such as OpenVLA complement this ecosystem, allowing adaptation to various arms, mobile platforms, or humanoid prototypes.

The idea is simple: instead of training a robot, we train the skill itself, then transfer it from one robot to another.
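One way to picture «training the skill, then transferring it» is a shared policy that outputs actions in a normalized range, with each robot converting them to its own command limits. The sketch below assumes exactly that; the `RobotProfile` type, the [-1, 1] convention and the limits are hypothetical, and real cross-robot models handle this in more elaborate ways.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass(frozen=True)
class RobotProfile:
    """Hypothetical description of one robot's command limits."""
    name: str
    low: Tuple[float, ...]
    high: Tuple[float, ...]


def to_robot_command(normalized: Sequence[float], robot: RobotProfile) -> List[float]:
    """Rescale a shared, normalized action in [-1, 1] to this robot's own range."""
    return [lo + (n + 1.0) * 0.5 * (hi - lo)
            for n, lo, hi in zip(normalized, robot.low, robot.high)]


if __name__ == "__main__":
    # The same skill output, sent to two different (hypothetical) robots.
    skill_output = [0.0, 1.0]
    arm = RobotProfile("arm", low=(0.0, -2.0), high=(1.0, 2.0))
    hand = RobotProfile("hand", low=(-0.5, 0.0), high=(0.5, 0.08))
    print(to_robot_command(skill_output, arm))   # [0.5, 2.0]
    print(to_robot_command(skill_output, hand))
```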

Data, simulation and world models: the new fuels of this revolution

A lack of robotic data

Training models to cope with the extreme variability of the real world also requires a considerable volume of data. The VLA models developed by Boston Dynamics, Google, Xpeng and Nvidia comprise billions of parameters, and feeding them takes vast amounts of training data.

However, while «traditional» generative AI models have extensive access to text corpora and image banks, robotic data is much more limited.

To compensate for this shortfall, robotics engineers are building large multi-robot datasets, for example through collaborations between laboratories and manufacturers. Another solution is to make extensive use of synthetic data generated with physics simulators and world models capable of creating an almost infinite variety of virtual environments, such as Google DeepMind's Genie 3 or Nvidia's simulation tools.

The idea is to expose the models to a variety of situations that cannot be reproduced solely with physical robots in laboratories.
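A common way to obtain this variety in simulation is domain randomization: each synthetic episode is generated with randomly drawn lighting, colours, object positions and physical properties, so that the model does not overfit to a single setting. The article does not name a specific method; the sketch below only shows the sampling step, and its parameter names and ranges are hypothetical (a real pipeline would feed them to a physics simulator).

```python
import random
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class SceneParams:
    """Hypothetical parameters for one randomized simulated scene."""
    light_intensity: float                   # relative brightness
    object_rgb: Tuple[float, float, float]   # object colour, each channel in [0, 1]
    object_xy: Tuple[float, float]           # object position on the table, metres
    friction: float                          # surface friction coefficient


def sample_scene(rng: random.Random) -> SceneParams:
    """Draw every scene property independently from an assumed range."""
    return SceneParams(
        light_intensity=rng.uniform(0.2, 1.5),
        object_rgb=(rng.random(), rng.random(), rng.random()),
        object_xy=(rng.uniform(-0.3, 0.3), rng.uniform(-0.3, 0.3)),
        friction=rng.uniform(0.4, 1.2),
    )


def generate_scenes(n: int, seed: int = 0) -> List[SceneParams]:
    """Generate n scenes; a fixed seed makes the synthetic dataset reproducible."""
    rng = random.Random(seed)
    return [sample_scene(rng) for _ in range(n)]


if __name__ == "__main__":
    for scene in generate_scenes(3, seed=7):
        print(scene)
```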

Many challenges to overcome in the real world

Other challenges must be overcome, this time in the real world. Controlling robotic movement is a delicate art.

While it is easy to instruct the robot to position its arm at the cap of a water bottle, asking it to turn the cap with its gripper requires applying the right amount of pressure at the right angle. «Everyday movements require control methods that involve more than just position, which makes movement control more complicated than it appears,» Stéphane Doncieux points out.
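A classic answer to this problem is impedance control, in which the robot behaves like a virtual spring and damper around its target instead of tracking a position at any cost. The one-dimensional sketch below is our own illustration, not a method described by the interviewees; the stiffness, damping, mass and the rigid «wall» standing in for the bottle cap are hypothetical.

```python
from typing import Optional, Tuple


def impedance_force(x_desired: float, x: float, v: float,
                    stiffness: float, damping: float) -> float:
    """Virtual spring-damper: F = K (x_desired - x) - D v."""
    return stiffness * (x_desired - x) - damping * v


def simulate(x_desired: float, stiffness: float, damping: float,
             wall: Optional[float] = None, mass: float = 1.0,
             dt: float = 0.001, steps: int = 5000) -> Tuple[float, float]:
    """Simulate a 1-D gripper starting at x = 0; return final position and force."""
    x, v = 0.0, 0.0
    for _ in range(steps):
        f = impedance_force(x_desired, x, v, stiffness, damping)
        v += f / mass * dt          # semi-implicit Euler integration
        x += v * dt
        if wall is not None and x > wall:
            x, v = wall, 0.0        # rigid contact: the gripper cannot pass the wall
    return x, impedance_force(x_desired, x, v, stiffness, damping)


if __name__ == "__main__":
    # Free space: the gripper settles on its target (critically damped, K=100, D=20).
    print(simulate(0.1, stiffness=100.0, damping=20.0))
    # Contact: target set 5 cm beyond a surface at 0.05 m; the pressing force
    # settles at K * 0.05 = 5 N.
    print(simulate(0.1, stiffness=100.0, damping=20.0, wall=0.05))
```

In contact, the force is set by the stiffness times the offset between target and surface, so choosing these two values amounts to choosing how hard the gripper presses.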

For his part, Cédric Vasseur warns against the illusion created by certain demonstration videos: they are filmed in a studio with optimal lighting, without any disruptive elements that could interfere with the robot or its vision system. «The objects handled have highly contrasting colours to make them easier for the AI to identify.» The robot may also have been trained for one specific environment. To return to the dishwasher example, a robot spent hours learning how to empty it in one particular kitchen, but if you move it to another kitchen, it has to start all over again.

Security, ethical and regulatory issues

Physical security and cybersecurity

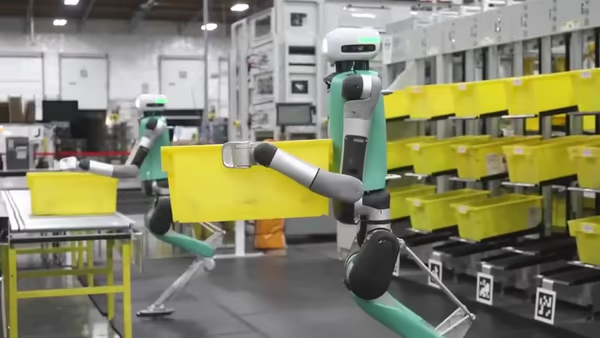

Before domestic robots are adopted in our homes, industry will be the first area of implementation, according to Cédric Vasseur.

«Many projects are already underway in Europe, particularly in Germany, where large industrial groups are working on the use of humanoid robots.» In addition, e-commerce players such as Amazon and Alibaba are investing heavily in fully automated warehouses built around robots and automated conveyor systems.

©Agility Robotics

The deployment of such advanced robots raises a number of risks, first and foremost security risks. By becoming able to communicate, the machine widens its attack surface. «If a robot responds to any voice command, it could harm others and carry out potentially malicious or illegal acts,» warns Stéphane Doncieux. In addition to these cyber risks, there are unpredictable physical risks: the robot is exposed to all the hazards of the real world and could be the target of sabotage.

Ethics: responsibility and human skills

Our two experts also raise the ethical and social issues that would arise from the widespread use of AI-powered robots. Stéphane Doncieux points to a loss of responsibility: faced with tools that seem relevant most of the time, users may be tempted to let them decide autonomously, without taking responsibility for the decisions made. This, in turn, leads to a loss of skills.

It will also be necessary to deal with employees' expectations regarding the conditions under which these robots are introduced into their workplace.

Regulation: the AI Act

Regulation must also be taken into account, as new frameworks emerge both for machine safety and for AI specifically. In Europe, under the AI Act, robots equipped with advanced AI can fall into the category of high-risk systems. This status imposes strict discipline: audits, comprehensive documentation, model transparency, continuous monitoring and enhanced cybersecurity.

This is not an artificial constraint: the more robots learn, the more they must prove that they remain reliable, predictable and secure.

What training courses are available for advanced robotics?

The robotics engineer of 2025 is no longer the same as that of 2015. They know ROS 2 like the back of their hand, understand advanced industrial vision, and handle simulators such as Isaac Lab as easily as an automation technician handles a pressure switch. They also know how to train an imitation model, interpret the outputs of a vision system, and diagnose a learning failure.
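In its most basic form, known as behaviour cloning, training an imitation model means fitting a function that maps what the robot observes to what a human demonstrator did. The toy sketch below fits a one-dimensional linear policy by least squares on hypothetical demonstration data and measures its error on held-out examples; real systems use neural networks on images and joint states, but the principle of supervised learning from demonstrations is the same.

```python
from typing import Sequence, Tuple


def fit_linear_policy(states: Sequence[float],
                      actions: Sequence[float]) -> Tuple[float, float]:
    """Behaviour cloning with a 1-D linear policy a = w * s + b (least squares)."""
    n = len(states)
    mean_s = sum(states) / n
    mean_a = sum(actions) / n
    cov = sum((s - mean_s) * (a - mean_a) for s, a in zip(states, actions))
    var = sum((s - mean_s) ** 2 for s in states)
    if var == 0.0:
        raise ValueError("demonstrations must cover more than one state")
    w = cov / var
    return w, mean_a - w * mean_s


def mean_squared_error(policy: Tuple[float, float], states: Sequence[float],
                       actions: Sequence[float]) -> float:
    """Error on held-out demonstrations: a high value is a first sign of failure."""
    w, b = policy
    return sum((w * s + b - a) ** 2 for s, a in zip(states, actions)) / len(states)


if __name__ == "__main__":
    # Hypothetical demonstrations produced by a linear "expert": a = -2 s + 0.5.
    demo_states = [0.0, 0.5, 1.0, 1.5, 2.0]
    demo_actions = [-2.0 * s + 0.5 for s in demo_states]
    policy = fit_linear_policy(demo_states, demo_actions)
    print(policy)
    print(mean_squared_error(policy, [0.25, 1.75], [0.0, -3.0]))
```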

Programming remains essential, but it is no longer central: what matters now is the ability to orchestrate complex pipelines where perception, AI and mechanics must interact seamlessly.

«To work on this type of project, it is important to be versatile and to develop a wide range of skills combining robotics and artificial intelligence,» says Cédric Vasseur.

ORSYS training courses

ORSYS offers state-of-the-art seminars for this purpose. Aimed at decision-makers and project managers, they provide an overview of the latest advances in the field.

This is the case for the training course «Robotics, state of the art» (ROB), which reviews past, present and future applications of robotics and the contributions of AI.

For decision-makers and project managers, another seminar presents the generative AI solutions available on the market and their uses (IAP). There is also a more specific training course on AI and robotics in healthcare (IAZ).

For more technical profiles, there are workshops that focus on technologies such as ROS (Robot Operating System), which enables the creation of robotic applications (ROH), or the workshop training course «Artificial intelligence, useful algorithms applied to robotics» (IAG). These courses provide a practical understanding of how to exploit new VLM/VLA models, simulation and open source tools in real projects.

«Mastering programming remains essential,» continues Cédric Vasseur. «Whatever kind of robot is being developed, it will need to be programmed at some point.» Knowledge of 3D design is also essential, both for the design itself and for understanding the physical constraints the robot will be subjected to.

Furthermore, project management is a key skill. «A robotics project is complex,» adds the trainer. «It involves different trades and the coordination of numerous components and peripherals.» When it comes to the purely robotic side, both mechanical and electronic skills are essential. «Some operations are done by hand, such as soldering or designing your own electronic circuit.»

Security: for robots, but also for AI

For the safety component, Cédric Vasseur leads a one-day training module focused on artificial intelligence and operational safety (ICY). Participants learn how to protect themselves against new threats related to AI, both in the virtual and physical worlds. Finally, the expert co-authored a white paper, published by ORSYS, on AI and cybersecurity to explore this topic in greater depth. In a context where robots and generative AI are converging, this security expertise is becoming central to the design of systems.

Robots powered by generative AI no longer simply execute commands; they make decisions and adapt. It is now up to us to understand their limitations, ensure their safe use, and train ourselves to maintain control over this new industrial power.

©UBTech