Using artificial intelligence in business communications is no longer optional; it has become a reflex. But it has to be done without risking your credibility, your compliance... or your reputation. Between the entry into force of the AI Act, the rules imposed by platforms and the misuses already observed, how can AI be used in a professional, responsible and transparent way? Frédéric Foschiani, an expert in social media and AI for communication, explains the precautions to take in order to benefit from AI without losing control of your communication.

Writing a post in a matter of seconds, generating an original visual, analysing sector trends... Artificial intelligence has become the favourite assistant of communicators. But behind the promise of efficiency lies a more complex reality: responsibility. Every piece of content generated, every piece of data used or every image modified now entails an obligation of transparency and exposes us to legal and confidentiality risks.

The entry into force of the European AI regulation, the AI Act, and the new rules imposed by platforms are profoundly changing practices. The question is therefore no longer whether AI should be used, but how to use it professionally and responsibly. Between compliance, transparency and credibility, the stakes are clear: the aim is to make AI a lever for quality, not a source of confusion.

Understanding the new framework: the AI Act and its impact

The AI Act in practice

In force since 1 August 2024, the European AI Act is the first comprehensive, cross-cutting and legally binding regulation dedicated exclusively to AI. Its principle? To classify AI systems according to four levels of risk (unacceptable, high, limited and minimal), with graduated obligations, particularly in terms of transparency for certain uses. Some of its provisions have already applied since 2 February 2025, including the ban on AI systems presenting unacceptable risks and the «AI literacy» requirement set out in Article 4 of the AI Act.

Examples of unacceptable risks

Cognitive and behavioural manipulation of people

AI systems that:

- exploit psychological or cognitive flaws

- manipulate behaviour without the person being aware of it

- may cause physical or psychological harm

👉 Example: an AI that subtly pushes a vulnerable individual (child, fragile person) to adopt dangerous or self-destructive behaviour.

Exploitation of vulnerable people

Ban on AI that specifically targets:

- children

- people with disabilities

- socially or economically vulnerable individuals, with the aim of influencing their decisions or behaviour

👉 Example: an intelligent toy using AI to encourage a child to buy a product or adopt a specific behaviour.

Chinese-style social scoring

Systems that:

- assess or rate individuals on the basis of their behaviour

- cross-reference social, personal and behavioural data

- lead to sanctions, exclusion or discrimination

👉 Example: assigning a «citizen rating» influencing access to housing, a job or a public service.

Real-time remote biometric identification (with very limited exceptions)

General ban on real-time facial recognition in public spaces for mass surveillance purposes.

⚠️ There are very limited exceptions for law enforcement officers (terrorism, missing persons, etc.), subject to strict judicial control.

👉 Example of a ban: automatically scanning faces in a street or station to identify individuals without a specific legal basis.

AI literacy concerns everyone who uses AI in a professional context: communication, marketing, HR, legal, finance, etc. It is not limited to social networks. It is not a technical certification but an obligation of means (a best-efforts obligation): organisations need to ensure that their teams understand the capabilities, limits and risks of AI, as well as good practice, particularly in terms of content reliability, bias and reputational impact.

The specific obligations for general-purpose AI models have applied since 2 August 2025. Most of the remaining requirements, particularly those concerning transparency, will apply fully from 2 August 2026, and certain requirements are then phased in until 2027.

For communicators, this text matters because it highlights the risks linked to transparency in content creation and online communication. The key point: the AI Act imposes a stronger governance framework, including transparency, traceability and accountability obligations.

Lack of transparency: a legal and reputational risk

Articles 50 and 99 of the AI Act lay down two major principles.

👉 Informing the public when content has been generated or modified by AI, particularly in the case of realistic visuals, deepfakes or synthesised voices.

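👉 Penalising breaches. Fines of up to €35 million or 7 % of total worldwide annual turnover for certain offences (e.g. prohibited practices), and up to €15 million or 3 % of turnover for others, including breaches of the transparency obligations. In each case the higher of the two amounts applies, or the lower one for SMEs.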
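To make the two penalty tiers concrete, the short Python sketch below computes the maximum fine ceiling from a company's worldwide annual turnover. It assumes the reading of Article 99 given above: the higher of the fixed amount and the turnover percentage applies, except for SMEs and start-ups, where the lower of the two applies. The function and constant names are illustrative, the turnover figures are hypothetical, and the result is a legal ceiling, not an estimate of an actual fine.

```python
# Illustrative ceilings from Article 99 of the AI Act:
# (fixed cap in EUR, percentage of total worldwide annual turnover)
PROHIBITED_PRACTICES = (35_000_000, 7)
OTHER_OBLIGATIONS = (15_000_000, 3)  # includes the Article 50 transparency obligations


def max_fine_eur(worldwide_turnover_eur: int, tier: tuple[int, int], sme: bool = False) -> float:
    """Return the maximum fine ceiling for one penalty tier.

    Assumption: large companies face whichever amount is higher,
    SMEs and start-ups whichever amount is lower.
    """
    fixed_cap, percent = tier
    turnover_cap = worldwide_turnover_eur * percent / 100
    return min(fixed_cap, turnover_cap) if sme else max(fixed_cap, turnover_cap)


if __name__ == "__main__":
    # Hypothetical company with EUR 1 billion in worldwide turnover
    print(max_fine_eur(1_000_000_000, OTHER_OBLIGATIONS))
    # Hypothetical SME with EUR 20 million in worldwide turnover
    print(max_fine_eur(20_000_000, OTHER_OBLIGATIONS, sme=True))
```

For the hypothetical €1 billion company, 3 % of turnover (€30 million) exceeds the €15 million fixed amount, so the turnover-based ceiling applies; for the hypothetical SME, the lower figure (€600,000) is the ceiling.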

In practical terms, publishing a visual generated or modified by AI without mentioning it can trigger a risk of non-compliance (AI Act and platform rules), especially if the content is realistic and likely to mislead.

For companies, this means a new editorial responsibility: they must be able to prove the origin of their content and take responsibility for its nature.

These transparency obligations will be fully applicable from 2 August 2026. Until then, companies must anticipate these requirements through their own reporting and labelling systems.

Labelling consists of informing the public that content has been generated or modified by an AI, where this information is necessary to avoid any confusion with authentic content. It is a tool for editorial governance and regulatory compliance.

GDPR, CNIL and ethical consistency

In its 2025 recommendations, the CNIL (France's data protection authority) reiterates the importance of informing individuals and enabling them to exercise their rights effectively in the context of AI models, in line with the GDPR.

There is more at stake than the legal aspect alone: digital trust is also affected.

A communication produced or assisted by AI must meet the same requirements as any other communication: accuracy, respect for consent and absence of manipulation. This is where the difference lies between responsible and careless use.

Platforms take action: transparency and control

LinkedIn: using content credentials to make the origin of content visible

LinkedIn relies on the standard developed by the C2PA (Coalition for Content Provenance and Authenticity), a consortium of tech and media companies (Adobe, BBC, Microsoft, Intel, etc.). The standard embeds provenance information in the metadata of multimedia files, which the platform can then display.

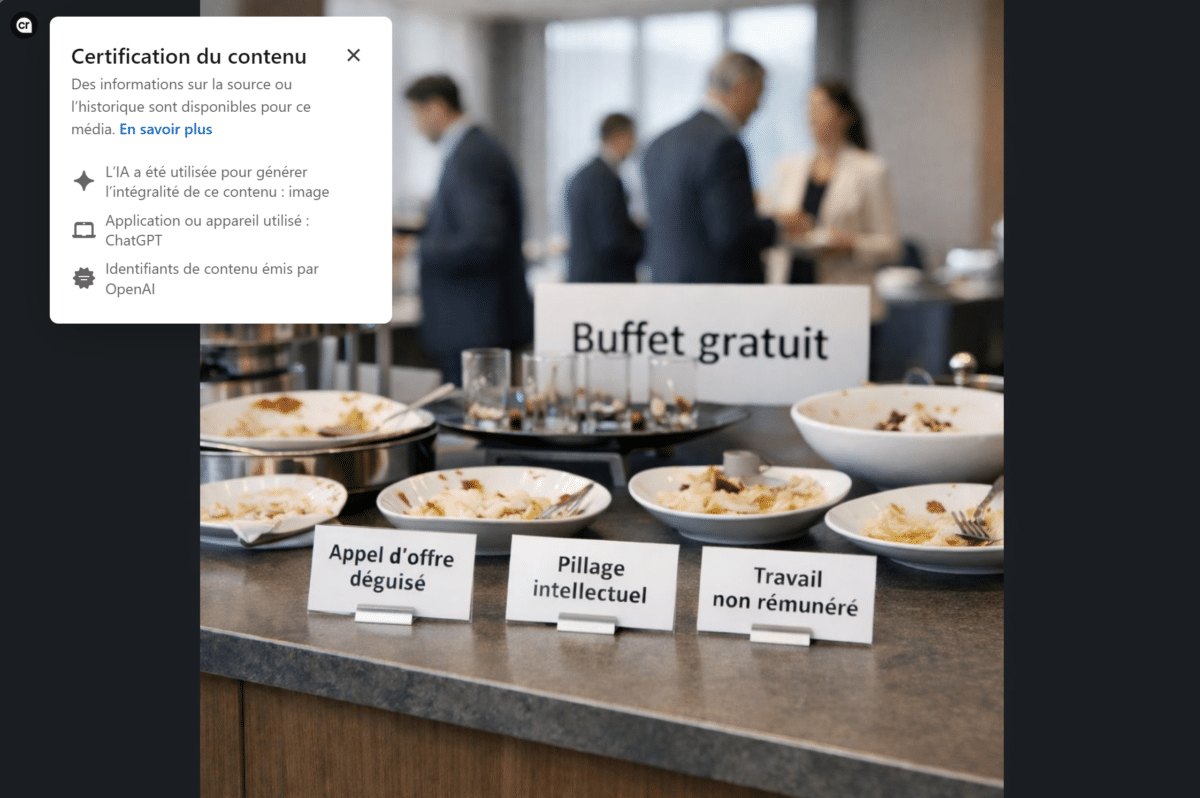

These «content credentials» indicate whether an image has been generated or modified by AI. In some cases, they can be used to trace the editing history (author's name, AI platform used). Every image or video generated by AI can now be automatically tagged, indicating its origin or modification.

This initiative marks a fundamental change. Professional social networks are becoming the guarantors of the reliability of publications, as required by the AI Act. This information is not always visible by default. Nevertheless, it sends out a strong signal in terms of transparency and editorial responsibility on a professional network.

For communicators, this reinforces the need to control the traceability of visuals and avoid any ambiguity about the nature of the content published.

Meta and YouTube: labelling becomes the norm

Meta (Facebook, Instagram, Threads) has announced the automatic labelling of images created by AI using embedded metadata (C2PA and IPTC).

These transparency measures are designed to inform users about the origin of content. They also aim to build trust, in line with new regulatory requirements and social network policies.

Meta also changed the wording of its labels in 2024 to distinguish more clearly between content «created with the help of AI» and content that has been «significantly altered». The stated aim is to make the label easier for users to read, while relying on provenance signals rather than on manual declaration alone.

YouTube, for its part, now requires the disclosure of significantly modified or AI-generated content when it appears realistic, in particular if it represents credible people, events or situations.

This obligation explicitly concerns professional use and is designed to limit the risks of deception or misinformation.

The aim of these practices is not to restrict creativity, but to maintain trust. Transparency is becoming a new communication standard: it is better to display the origin of content than to leave it in doubt.

TikTok: self-declaration and automatic detection

TikTok has also adopted the C2PA standard and introduced automatic labelling (auto-flag) of AI-generated videos imported from other platforms. Content generated with its own tools is tagged automatically. This filtering is designed to combat false information, but also to protect brands: on a network where perceived authenticity gives a message its value, a labelling error or an overly artificial visual can quickly undermine credibility.

How to identify AI-generated content

Provenance standards such as C2PA or IPTC* metadata play a key role in identifying content generated by AI. They make it possible to embed information on the origin of a file, the tools used or the modifications made. However, these mechanisms are not infallible: metadata can be deleted during re-export, and not all tools implement them in the same way.

In other words, IPTC/C2PA metadata help to identify the origin of content, but they can never replace editorial governance or internal reporting systems.

*IPTC (International Press Telecommunications Council) is an international body that defines the metadata standards used by the media, press agencies and digital platforms, integrating information such as author, date and place of creation, rights of use and, more recently, indications linked to content generated or modified by artificial intelligence, directly into files (images, videos, documents).
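To illustrate both the usefulness and the limits of these standards, here is a minimal Python sketch that scans a file's raw bytes for the IPTC «digital source type» codes used to flag AI-generated media. It assumes the marker is stored as plain, uncompressed text in an embedded XMP packet, as is common in JPEG files; it does not parse or cryptographically validate C2PA manifests, which requires dedicated tooling such as the open-source libraries published around the C2PA standard. The function names are hypothetical, and an empty result proves nothing, since metadata may have been stripped on re-export.

```python
from pathlib import Path

# Base URI of the IPTC NewsCodes "digital source type" vocabulary
IPTC_DST_BASE = "http://cv.iptc.org/newscodes/digitalsourcetype/"

# Two codes from that vocabulary that signal AI involvement
AI_SOURCE_TYPES = {
    "trainedAlgorithmicMedia": "created by a trained AI model",
    "compositeWithTrainedAlgorithmicMedia": "composite including AI-generated elements",
}


def find_ai_source_types(data: bytes) -> list[str]:
    """Return the AI-related IPTC codes whose full URI appears verbatim in the bytes."""
    return [
        code
        for code in AI_SOURCE_TYPES
        if (IPTC_DST_BASE + code).encode("utf-8") in data
    ]


def describe_file(path: str) -> str:
    """Summarise what this naive byte scan finds in a file on disk."""
    codes = find_ai_source_types(Path(path).read_bytes())
    if not codes:
        # Absence of a marker is NOT evidence that the content is authentic
        return "no IPTC AI marker found"
    return "; ".join(f"{code}: {AI_SOURCE_TYPES[code]}" for code in codes)
```

A byte scan was chosen to keep the sketch dependency-free; in practice, a proper metadata reader and a C2PA validator would be needed to trust the result.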

Integrate AI into your strategy without risking your reputation and confidentiality

AI as an ally: optimising without taking away responsibility

Beyond the creation of visuals and videos, AI can be a formidable tool for industry monitoring, writing posts or generating creative ideas. But it does not think, verify or understand context.

Before any publication, four reflexes must become systematic:

- Never enter personal data or confidential information (customers, HR, contracts, financial data, strategy, etc.) in an AI tool that has not been validated by the company, including for reformulation purposes.

- Ask the AI to cite its sources (articles, studies, figures)

- Cross-check and verify the data provided by the AI

- Adapt the tone and message to the company's positioning

An AI can suggest a viral trend... but only a communicator knows if it really serves the strategy.

Expert voice - Frédéric Foschiani

«I regularly use AI as an aid to monitoring and editorial ideation. But one rule never varies: no data, no figures and no quotes should be used without human verification. AI can suggest angles or trends, but all too often it produces content that is credible... but false. The Deloitte example illustrates this risk perfectly. In a professional context, publishing erroneous information engages the responsibility and credibility of its author and of their company.»

Formalise an internal user charter

Implementing an internal AI charter is now good practice, and a concrete way to meet the AI literacy obligation set out in Article 4 of the AI Act.

In particular, it should specify:

- authorised tools

- acceptable uses

- confidentiality rules applicable to the use of AI tools

- traceability and labelling rules for content generated or modified by AI

- notices to display when content is generated or modified by AI

- need for systematic human review

Some companies have already formalised this framework, going so far as to include an «AI disclosure procedure» for their publications, particularly on social networks.

This internal system aims to inform the public or users when content has been generated or modified by artificial intelligence, to avoid any confusion with human production or authentic information. This type of initiative demonstrates a mature approach: technology is integrated into practices, but never used without control.

Expert voice - Frédéric Foschiani

«This need for a framework is not theoretical; it emerges very concretely from the field. When I support companies, I find that the most frequent mistake is not the use of AI, but the absence of a framework. More and more companies are providing internal AI solutions to limit the risk of sensitive or confidential data being disseminated outside the organisation. However, these tools are often deployed without any real support for teams in terms of best practice. Employees have the technology, but not always the guidance they need to use it effectively and responsibly. This applies as much to mastering prompting (knowing how to formulate a clear request so as to obtain a result that can be used quickly) as it does to understanding the limits of AI, its biases and the regulatory obligations to be respected in a professional context, particularly in terms of transparency and traceability of content. Without this framework, AI becomes either an under-exploited tool or, conversely, an avoidable risk factor for a company's communication and reputation.»

Anticipating communication errors and crises

Recent history is full of examples where AI has betrayed the communication it was intended to serve. For example, a company published an AI-generated image of a medical team to illustrate a campaign. Internet users quickly detected the artificiality of the image: anatomical errors, blurred faces and inconsistent details. As a result, the company was accused of misrepresenting and exploiting human beings. Even outside social networks, this type of error is a reminder of the need for vigilance.

In October 2025, Deloitte had to partially reimburse the Australian government after submitting a report riddled with AI-generated errors: false references, inaccurate legal citations and inconsistencies, attributed to the uncontrolled use of generative AI tools. This example illustrates a key point: an error generated by AI always becomes a human error.

Actions to plan:

- train teams in the proper use of AI so they understand its biases and risks

- master prompting so that the instructions given to the AI are as precise as possible

- institute a strict validation protocol (proofreading, source checking, consistency tests)

- establish a crisis response plan, including clarification messages, public corrections and transparency about the origin of the error

- keep and archive a register of AI-generated content to ensure traceability
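A register of AI-generated content can be as simple as a spreadsheet. The Python sketch below shows one possible structure, assuming hypothetical fields (channel, tool, role of the AI, whether a label was shown, who reviewed the content) that each organisation would adapt to its own charter. It also flags two gaps discussed in this article: content published without a recorded human review, and AI-generated or AI-modified content published without a disclosure. That second check is deliberately stricter than the legal rule, which targets content likely to be mistaken for authentic material.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields
from datetime import date


@dataclass
class AIContentRecord:
    """One line of a hypothetical internal register of AI-assisted content."""
    published_on: date
    channel: str           # e.g. "LinkedIn", "website", "newsletter"
    content_ref: str       # internal ID or URL of the published item
    ai_tool: str           # tool authorised by the internal charter
    ai_role: str           # "generated", "modified" or "assisted"
    label_displayed: bool  # was an AI mention shown to the audience?
    reviewed_by: str       # person who carried out the human validation ("" if none)


def compliance_gaps(records: list[AIContentRecord]) -> list[str]:
    """List entries missing a human review or an AI disclosure."""
    gaps = []
    for record in records:
        if not record.reviewed_by:
            gaps.append(f"{record.content_ref}: no human review recorded")
        # Conservative in-house policy, stricter than the legal requirement
        if record.ai_role in {"generated", "modified"} and not record.label_displayed:
            gaps.append(f"{record.content_ref}: AI involvement not disclosed")
    return gaps


def to_csv(records: list[AIContentRecord]) -> str:
    """Export the register as CSV text for archiving."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=[f.name for f in fields(AIContentRecord)])
    writer.writeheader()
    for record in records:
        row = asdict(record)
        row["published_on"] = record.published_on.isoformat()
        writer.writerow(row)
    return buffer.getvalue()


if __name__ == "__main__":
    # "ApprovedTool" is a placeholder name, not a real product
    register = [
        AIContentRecord(date(2026, 3, 2), "LinkedIn", "post-001", "ApprovedTool",
                        "generated", False, ""),
    ]
    for gap in compliance_gaps(register):
        print(gap)
    print(to_csv(register))
```

Exporting to CSV keeps the register readable by non-technical teams and easy to archive as proof of AI use.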

An AI error, whether internal or external, on a website or in a post, sooner or later becomes a communication error. Control and response processes are not optional: they are the safety net of any responsible AI strategy.

Credibility, quality and SEO: the new trust indicators

Evaluation of content by search engines

As Google has pointed out, what counts is not how content is created but the value it provides. AI content is evaluated in the same way as other content, according to the E-E-A-T criteria: experience, expertise, authoritativeness and trustworthiness. AI-generated content can therefore rank well, provided that it is verified, contextualised and enriched by human expertise.

The challenge for communicators is no longer to avoid AI, but to frame it so that it can contribute to the production of high added-value content.

Transparency and reputation

Mentioning that AI has contributed to the creation of a piece of content does not diminish trust; on the contrary, such transparency becomes a marker of seriousness and ethics. A company that hides its use of AI today takes on more risk than it avoids. Transparency, verification and human control remain the pillars of responsible, AI-enhanced communication.

The double check

Human proofreading remains the best guarantee of credibility. It allows us to correct approximations, validate information, adapt the tone, avoid bias and guarantee consistency with the overall strategy. This dual approach - AI for productivity and human for reliability - defines the new frontier of responsible communication.

7 reflexes for responsible communication with AI

- Check sources, figures, quotes, etc. generated by the AI before publishing.

- Mention the use of AI when it has contributed to creating a text, visual, audio or video.

- Proofread systematically to validate tone, content and consistency.

- Update your internal AI charter in line with legal and technological developments.

- Monitor public reactions to anticipate misunderstandings or criticism linked to transparency.

- Never expose confidential information or personal data in an AI tool not validated by the organisation.

- Keep proof of AI use in a register.

Get trained!

Understanding the regulatory frameworks, mastering AI tools and preserving the credibility of your communication requires ongoing skills development. ORSYS offers several training courses specifically designed to support communicators and community managers in these new uses.

- Community management: boosting your organisation and communication with AI

- The essentials for improving your presence on social networks with AI

- AI to create compelling content (text, images, video)

These courses are part of the overall offering of ORSYS IA Academy and corporate communication training.

AI is not a substitute for communication; it reveals its flaws and strengths. Used methodically, it becomes a tool for anticipation, creativity and efficiency. Without a framework, it can lead to confusion and even a loss of trust. Tomorrow's communications will be hybrid: human in intent, augmented in form. And the difference between innovation and imprudence will depend on the lucidity of professionals.